Computer vision technique to enhance 3D understanding of 2D images

Upon looking at photographs and drawing on their past experiences, humans can often perceive depth in pictures that are, themselves, perfectly flat. However, getting computers to do the same thing has proved quite challenging.

The problem is difficult for several reasons, one being that information is inevitably lost when a scene that takes place in three dimensions is reduced to a two-dimensional (2D) representation. There are some well-established strategies for recovering 3D information from multiple 2D images, but they each have some limitations. A new approach called “virtual correspondence,” which was developed by researchers at MIT and other institutions, can get around some of these shortcomings and succeed in cases where conventional methodology falters.

The standard approach, called “structure from motion,” is modeled on a key aspect of human vision. Because our eyes are separated from each other, they each offer slightly different views of an object. A triangle can be formed whose sides consist of the line segment connecting the two eyes, plus the line segments connecting each eye to a common point on the object in question. Knowing the angles in the triangle and the distance between the eyes, it’s possible to determine the distance to that point using elementary geometry, although the human visual system, of course, can make rough judgments about distance without having to go through arduous trigonometric calculations. This same basic idea, triangulation or parallax views, has been exploited by astronomers for centuries to calculate the distance to faraway stars.
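The triangle argument can be written out with the law of sines: the side from the left observer to the point is opposite the angle at the right observer, and the baseline is opposite the angle at the point. The short Python sketch below assumes the two interior angles at the ends of the baseline are already known (a simplification, since cameras measure pixel positions rather than angles) and returns both the distance from the left observer and the perpendicular depth of the point. The function name and the example numbers are illustrative only.

```python
import math
from typing import Tuple


def triangulate_from_angles(baseline: float, alpha_deg: float, beta_deg: float) -> Tuple[float, float]:
    """Locate a point seen from both ends of a baseline.

    alpha_deg: interior angle at the left observer, between the baseline and
               the line of sight to the point.
    beta_deg:  the same angle measured at the right observer.
    Returns (distance from the left observer, perpendicular depth from the baseline).
    """
    alpha = math.radians(alpha_deg)
    beta = math.radians(beta_deg)
    gamma = math.pi - alpha - beta  # angle at the observed point
    if gamma <= 0:
        raise ValueError("sight lines do not converge in front of the baseline")
    # Law of sines: the left-observer-to-point side is opposite beta,
    # and the baseline is opposite gamma.
    range_left = baseline * math.sin(beta) / math.sin(gamma)
    # Dropping a perpendicular from the point onto the baseline gives the depth.
    depth = range_left * math.sin(alpha)
    return range_left, depth


# Hypothetical numbers: observers 2 units apart, each sighting the point at 45 degrees.
rng, depth = triangulate_from_angles(2.0, 45.0, 45.0)
print(f"range = {rng:.4f}, depth = {depth:.4f}")  # range = 1.4142, depth = 1.0000
```

The smaller the angle at the point (that is, the farther away the point relative to the baseline), the more a small error in either sighting angle changes the result, which is why distant stars need a very long baseline.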

Triangulation is a key element of structure from motion. Suppose you have two pictures of an object (a sculpted figure of a rabbit, for instance), one taken from the left side of the figure and the other from the right. The first step would be to find points or pixels on the rabbit’s surface that both images share. A researcher could go from there to determine the “poses” of the two cameras: the positions where the photos were taken from and the direction each camera was facing. Knowing the distance between the cameras and the way they were oriented, one could then triangulate to work out the distance to a selected point on the rabbit. And if enough common points are identified, it might be possible to obtain a detailed sense of the object’s (or “rabbit’s”) overall shape.
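The two-view step can be made concrete with linear (DLT) triangulation, one standard textbook way to intersect two viewing rays once the camera poses are known. The NumPy sketch below assumes both poses are given, as in the paragraph above; the intrinsics `K`, the poses, the “rabbit” point, and all helper names are made up for illustration. In practice this linear estimate is usually refined further, for example by bundle adjustment.

```python
import numpy as np


def camera_matrix(K, R, C):
    """3x4 projection P = K [R | -R C] for rotation R (world to camera) and centre C."""
    t = -R @ C
    return K @ np.hstack([R, t.reshape(3, 1)])


def project(P, X):
    """Project a 3D world point X to pixel coordinates (u, v)."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


def triangulate_dlt(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point from two views with known poses.

    Each view contributes two rows derived from x cross (P X) = 0; the homogeneous
    solution is the right singular vector with the smallest singular value.
    """
    A = np.vstack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]  # back from homogeneous coordinates


def rot_y(theta):
    """Rotation about the vertical (y) axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


# Hypothetical setup: left camera at the origin, right camera 2 units to the side, turned inwards.
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
P_left = camera_matrix(K, np.eye(3), np.zeros(3))
P_right = camera_matrix(K, rot_y(0.3), np.array([2.0, 0.0, 0.0]))

point_on_rabbit = np.array([0.1, -0.2, 5.0])  # made-up surface point
u1, u2 = project(P_left, point_on_rabbit), project(P_right, point_on_rabbit)
print(triangulate_dlt(P_left, P_right, u1, u2))  # recovers approximately [0.1, -0.2, 5.0]
```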

Considerable progress has been made with this technique, comments Wei-Chiu Ma, a Ph.D. student in MIT’s Department of Electrical Engineering and Computer Science (EECS), “and people are now matching pixels with greater and greater accuracy. So long as we can observe the same point, or points, across different images, we can use existing algorithms to determine the relative positions between cameras.” But the approach only works if the two images have a large overlap. If the input images have very different viewpoints, and hence contain few, if any, points in common, he adds, “the system may fail.”
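The article does not say which “existing algorithms” Ma means. One classic example is Hartley’s normalized eight-point algorithm, which estimates the fundamental matrix `F` relating two views from matched pixels; given the camera intrinsics, the relative rotation and translation can then be extracted from it (that decomposition is not shown here). The NumPy sketch below assumes noise-free matches, and its function names are hypothetical. It also makes the overlap requirement concrete: with fewer than eight shared points, this linear formulation is underdetermined and the function gives up.

```python
import numpy as np


def _normalize(pts):
    """Hartley normalization: move the centroid to the origin and scale so the
    mean distance from it is sqrt(2). Returns homogeneous points and the 3x3 transform."""
    centroid = pts.mean(axis=0)
    mean_dist = np.mean(np.linalg.norm(pts - centroid, axis=1))
    s = np.sqrt(2.0) / mean_dist
    T = np.array([[s, 0.0, -s * centroid[0]],
                  [0.0, s, -s * centroid[1]],
                  [0.0, 0.0, 1.0]])
    homog = np.column_stack([pts, np.ones(len(pts))])
    return (T @ homog.T).T, T


def eight_point(x1, x2):
    """Estimate the fundamental matrix F with x2^T F x1 = 0 from N >= 8 matches.

    x1, x2: (N, 2) arrays of matched pixel coordinates in image 1 and image 2.
    """
    x1, x2 = np.asarray(x1, float), np.asarray(x2, float)
    if len(x1) < 8 or len(x1) != len(x2):
        raise ValueError("need at least 8 matched points shared by both images")
    n1, T1 = _normalize(x1)
    n2, T2 = _normalize(x2)
    # One linear equation per match in the nine entries of F (row-major order).
    A = np.column_stack([
        n2[:, 0] * n1[:, 0], n2[:, 0] * n1[:, 1], n2[:, 0],
        n2[:, 1] * n1[:, 0], n2[:, 1] * n1[:, 1], n2[:, 1],
        n1[:, 0], n1[:, 1], np.ones(len(n1)),
    ])
    _, _, Vt = np.linalg.svd(A)
    F = Vt[-1].reshape(3, 3)
    # A valid fundamental matrix has rank 2: zero out the smallest singular value.
    U, S, Vt = np.linalg.svd(F)
    S[2] = 0.0
    F = U @ np.diag(S) @ Vt
    # Undo the normalization, then fix the arbitrary overall scale.
    F = T2.T @ F @ T1
    return F / np.linalg.norm(F)
```

Real matches are noisy and contain outliers, so in practice a routine like this is usually wrapped in a robust estimator such as RANSAC.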

During summer 2020, Ma came up with a novel way of doing things that could greatly expand the reach of structure from motion. MIT was closed at the time due to the pandemic, and Ma was home in Taiwan, relaxing on the couch. While looking at the palm of his hand, and his fingertips in particular, it occurred to him that he could clearly picture his fingernails, even though they were not visible to him.

https://www.youtube.com/watch?v=LSBz9-TibAM

That was the inspiration for the notion of virtual correspondence, which Ma has subsequently pursued with his advisor, Antonio Torralba, an EECS professor and investigator at the Computer Science and Artificial Intelligence Laboratory, along with Anqi Joyce Yang and Raquel Urtasun of the University of Toronto and Shenlong Wang of the University of Illinois. “We want to incorporate human knowledge and reasoning into our existing 3D algorithms,” Ma says, the same reasoning that enabled him to look at his fingertips and conjure up fingernails on the other side, the side he could not see.

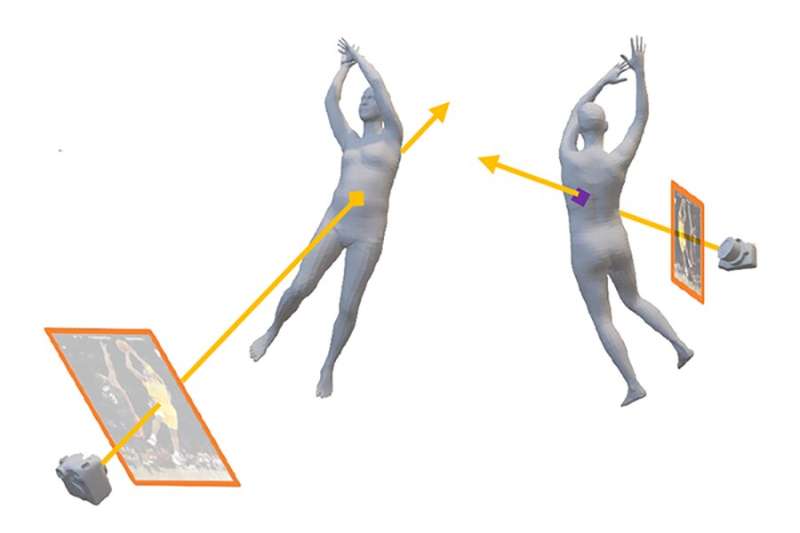

Structure from motion works when two images have points in common, because that means a triangle can always be drawn connecting the cameras to the common point, and depth information can thereby be gleaned from that. Virtual correspondence offers a way to carry things further. Suppose, once again, that one photo is taken from the left side of a rabbit and another photo is taken from the right side. The first photo might reveal a spot on the rabbit’s left leg. But since light travels in a straight line, one could use general knowledge of the rabbit’s anatomy to know where a light ray going from the camera to the leg would emerge on the rabbit’s other side. That point may be visible in the other image (taken from the right-hand side) and, if so, it could be used via triangulation to compute distances in the third dimension.
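The “look through the object” step can be sketched geometrically. The NumPy example below is a toy stand-in, not the team’s method: it replaces knowledge of the rabbit’s (or a human’s) shape with a hypothetical sphere of known centre and radius, and it assumes both camera poses are already known, whereas the article describes the team using shape knowledge to help pin down those poses. It back-projects a pixel from the first camera into a ray, finds where that ray leaves the sphere on the far side, checks that this exit point faces the second camera, and projects it into the second image. All function names and numbers are illustrative.

```python
import numpy as np


def ray_sphere_exit(origin, direction, center, radius):
    """Far-side (exit) intersection of a ray with a sphere, or None if it misses."""
    d = direction / np.linalg.norm(direction)
    oc = origin - center
    b = np.dot(oc, d)
    c = np.dot(oc, oc) - radius ** 2
    disc = b * b - c  # discriminant of t^2 + 2bt + c = 0
    if disc < 0:
        return None
    t_exit = -b + np.sqrt(disc)  # larger root: where the ray leaves the sphere
    if t_exit <= 0:
        return None
    return origin + t_exit * d


def virtual_correspondence(K, R1, C1, R2, C2, pixel1, center, radius):
    """Map a pixel in camera 1 to the pixel in camera 2 where its ray exits the shape.

    Cameras use x_cam = R (X - C). The sphere (center, radius) is a hypothetical
    stand-in for a shape prior. Returns (pixel2, exit_point) or None.
    Image bounds and occlusion by other objects are not checked.
    """
    ray_cam = np.linalg.inv(K) @ np.array([pixel1[0], pixel1[1], 1.0])
    ray_world = R1.T @ ray_cam  # rotate the viewing ray into world coordinates
    exit_point = ray_sphere_exit(C1, ray_world, center, radius)
    if exit_point is None:
        return None
    # On a convex shape, a surface point faces a camera iff its normal points toward it.
    normal = (exit_point - center) / radius
    if np.dot(normal, C2 - exit_point) <= 0:
        return None
    x_cam2 = R2 @ (exit_point - C2)
    if x_cam2[2] <= 0:
        return None  # behind camera 2
    x = K @ x_cam2
    return x[:2] / x[2], exit_point


# Hypothetical scene: a unit sphere 5 units ahead of camera 1; camera 2 faces it from the far side.
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
R1, C1 = np.eye(3), np.zeros(3)
R2, C2 = np.diag([-1.0, 1.0, -1.0]), np.array([0.0, 0.0, 10.0])  # turned 180 degrees about y
center = np.array([0.0, 0.0, 5.0])
result = virtual_correspondence(K, R1, C1, R2, C2, (340.0, 250.0), center, 1.0)
if result is not None:
    pixel2, exit_point = result
    print("camera-1 pixel (340, 250) pairs with camera-2 pixel", pixel2)
```

The resulting pixel pair can then be handed to ordinary two-view triangulation, even though the two images share no directly visible surface point.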

Virtual correspondence, in other words, allows one to take a point from the first image on the rabbit’s left flank and connect it with a point on the rabbit’s unseen right flank. “The advantage here is that you don’t need overlapping images to proceed,” Ma notes. “By looking through the object and coming out the other end, this technique provides points in common to work with that weren’t initially available.” In that way, the constraints imposed on the conventional method can be circumvented.

One might ask how much prior knowledge is needed for this to work, because if you had to know the shape of everything in the image from the outset, no calculations would be required. The trick that Ma and his colleagues employ is to use certain familiar objects in an image, such as the human form, to serve as a kind of “anchor,” and they’ve devised methods for using our knowledge of the human shape to help pin down the camera poses and, in some cases, infer depth within the image. In addition, Ma explains, “the prior knowledge and common sense that is built into our algorithms is first captured and encoded by neural networks.”

The team’s ultimate goal is far more ambitious, Ma says. “We want to make computers that can understand the three-dimensional world just like humans do.” That objective is still far from realization, he acknowledges. “But to go beyond where we are today, and build a system that acts like humans, we need a more challenging setting. In other words, we need to develop computers that can not only interpret still images but can also understand short video clips and eventually full-length movies.”

A scene in the film “Good Will Hunting” demonstrates what he has in mind. The audience sees Matt Damon and Robin Williams from behind, sitting on a bench that overlooks a pond in Boston’s Public Garden. The next shot, taken from the opposite side, offers frontal (though fully clothed) views of Damon and Williams with an entirely different background. Everyone watching the movie immediately knows they’re watching the same two people, even though the two shots have nothing in common. Computers can’t make that conceptual leap yet, but Ma and his colleagues are working hard to make these machines more adept and, at least when it comes to vision, more like us.

The team’s work will be presented next week at the Conference on Computer Vision and Pattern Recognition.

This story is republished courtesy of MIT News (web.mit.edu/newsoffice/), a popular site that covers news about MIT research, innovation and teaching.

Citation:

Computer vision technique to enhance 3D understanding of 2D images (2022, June 20)

retrieved 20 June 2022

from https://techxplore.com/information/2022-06-vision-approach-3d-2d-photos.html
